Artificial intelligence (AI) has been studied for roughly seven decades, and its development has been shaped by advances in algorithms, computing hardware, and available data. From the symbolic, rule-based programs of the 1950s to today's deep learning systems, AI has changed substantially. In this article, we provide an overview of the historical development of AI, highlighting the key milestones and technological advances that have contributed to its growth.

Early Beginnings: 1950s-1960s

The term “Artificial Intelligence” was coined by computer scientist John McCarthy in the 1955 proposal for the Dartmouth Summer Research Project on Artificial Intelligence. This 1956 workshop at Dartmouth College, which McCarthy organized with Marvin Minsky, Nathaniel Rochester, and Claude Shannon, is widely regarded as the founding event of the field. The idea of thinking machines is much older: ancient Greek myths, for example, describe artificial beings that could think and act like humans. Early AI research focused on symbolic approaches: programs that solved problems by manipulating symbols according to explicit, predefined rules and search procedures.

One of the most significant events in the early history of AI was the development of the Logic Theorist by Allen Newell, Herbert Simon, and Cliff Shaw in 1955-1956. The program proved theorems from Whitehead and Russell's Principia Mathematica by imitating aspects of human problem-solving, and it is often described as the first artificial intelligence program.

Rule-Based Expert Systems: 1970s-1980s

In the 1970s and 1980s, much AI research shifted toward expert systems, which were designed to capture the decision-making of human specialists in a narrow domain. These systems encoded expert knowledge as a set of if-then rules, and an inference engine applied those rules to the facts of a particular case to reach conclusions. Expert systems were adopted in several industries, including finance and healthcare.

One of the best-known expert systems is MYCIN, developed at Stanford University in the 1970s, primarily by Edward Shortliffe. MYCIN identified the bacteria likely to be causing severe infections, such as bacteremia and meningitis, from symptoms and laboratory data, and recommended antibiotics and dosages.
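To make the idea of an inference engine concrete, here is a minimal sketch of forward chaining, one common way such systems applied their rules: any rule whose conditions are all known facts "fires" and adds its conclusion as a new fact, and the loop repeats until nothing changes. The rule format, the function name `forward_chain`, and the toy animal-identification rules are hypothetical illustrations, not taken from any historical system. Many expert systems, MYCIN among them, instead reasoned backward from a goal toward the facts needed to support it.

```python
# A minimal forward-chaining inference engine. The rule format and the
# toy rules below are hypothetical, for illustration only.
Rule = tuple[frozenset[str], str]  # (conditions that must all hold, conclusion)


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Fire every rule whose conditions are all known facts, adding its
    conclusion, and repeat until a full pass adds nothing new."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conclusion not in known and conditions <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical toy rules in the style of a textbook animal-identification system.
RULES: list[Rule] = [
    (frozenset({"has_feathers"}), "is_bird"),
    (frozenset({"is_bird", "cannot_fly", "swims"}), "is_penguin"),
    (frozenset({"is_bird", "cannot_fly", "long_neck"}), "is_ostrich"),
]

if __name__ == "__main__":
    derived = forward_chain({"has_feathers", "cannot_fly", "swims"}, RULES)
    print(sorted(derived))
    # prints: ['cannot_fly', 'has_feathers', 'is_bird', 'is_penguin', 'swims']
```

The knowledge lives entirely in the rule list, which is what made expert systems attractive: domain experts could, in principle, extend a system by adding rules without changing the engine.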

Machine Learning: 1990s-Present

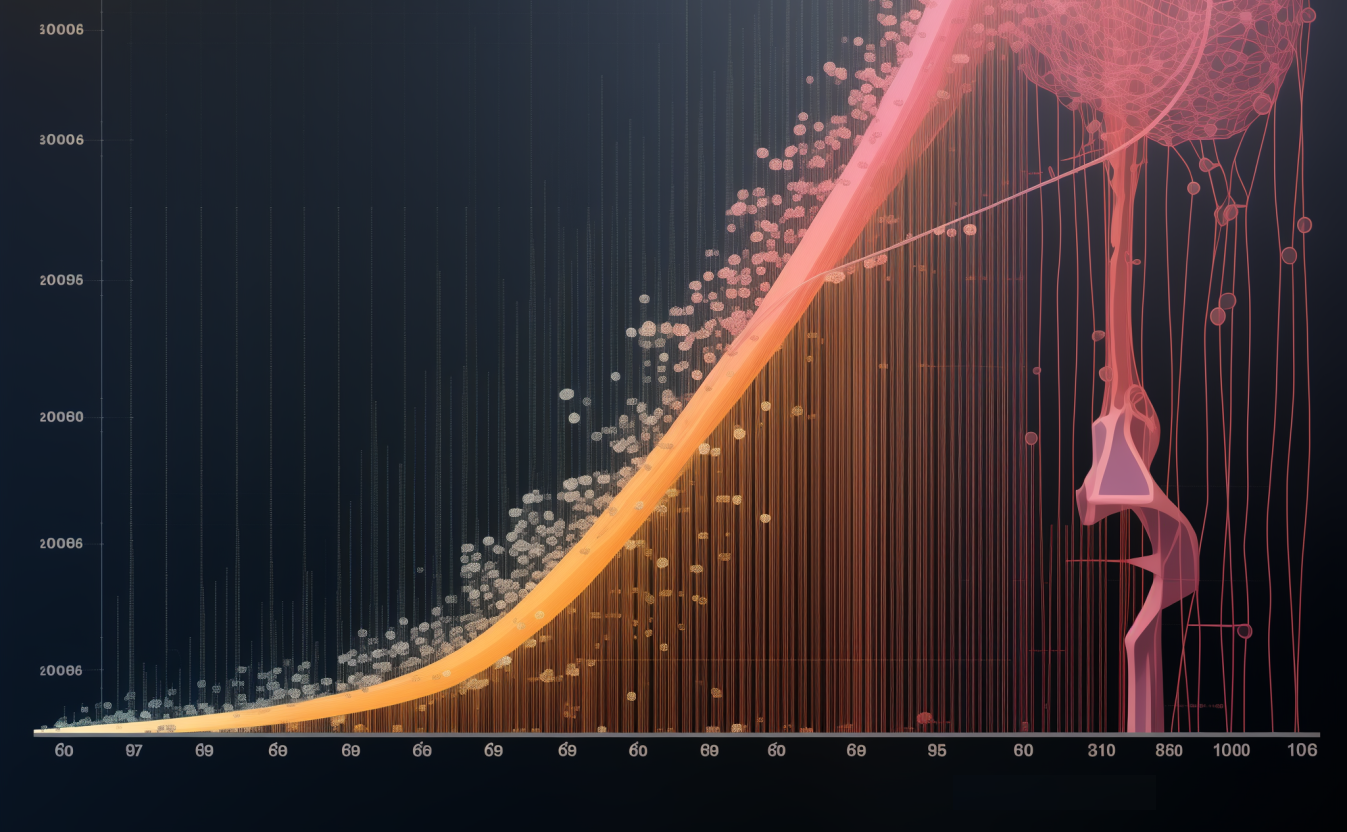

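In the 1990s, machine learning became the dominant approach to AI, although its roots reach back to the 1950s: Arthur Samuel popularized the term in 1959 with his work on a checkers-playing program. Instead of following hand-written rules, machine learning algorithms learn patterns from example data, so a program's behavior is shaped by the data rather than by explicit programming. This approach has led to significant advances in image and speech recognition, natural language processing, and other areas of AI research.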
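As a minimal illustration of learning from data, the sketch below fits a straight line y ≈ w·x + b to example pairs using gradient descent on the mean squared error. Nothing in the code states that the slope is 2 or the intercept is 1; the parameters are adjusted step by step until the model's predictions match the examples. The function name `fit_line`, the learning rate (chosen to suit this small dataset), and the epoch count are illustrative assumptions, and gradient descent on a linear model is only one of many machine learning methods.

```python
def fit_line(xs: list[float], ys: list[float],
             lr: float = 0.05, epochs: int = 2000) -> tuple[float, float]:
    """Fit y ≈ w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # start with no knowledge of the relationship
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors of the current model on every example.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of MSE = (1/n) * sum(error^2) with respect to w and b.
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        # Step against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    # Example data generated by y = 2x + 1; the program is never told this rule.
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [1.0, 3.0, 5.0, 7.0, 9.0]
    w, b = fit_line(xs, ys)
    print(round(w, 3), round(b, 3))  # prints: 2.0 1.0
```

Swapping in different example data changes what the program "knows" without changing a line of its code, which is the essential contrast with the rule-based systems of the previous era.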

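Deep learning, which gained momentum in the mid-2000s and produced breakthrough results in the early 2010s, further accelerated the progress of AI; a widely cited milestone is the AlexNet network's win in the 2012 ImageNet image-recognition competition. Deep learning models stack multiple layers of artificial neurons, which lets them learn increasingly abstract representations of data. This enabled them to reach state-of-the-art accuracy on tasks such as image recognition, speech recognition, and natural language processing.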
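Why do layers matter? A single artificial neuron draws a linear decision boundary, so on its own it cannot compute XOR (output 1 exactly when one of two inputs is 1). Adding one hidden layer fixes this. The sketch below follows a standard textbook construction with ReLU neurons; the function names are our own, and the weights are picked by hand for illustration, whereas a deep learning model would learn its weights from data, typically with backpropagation.

```python
def relu(z: float) -> float:
    """Rectified linear activation: passes positive inputs, zeroes negatives."""
    return max(0.0, z)


def xor_network(x1: float, x2: float) -> float:
    """Two-layer network computing XOR with hand-picked (not learned) weights."""
    # Hidden layer: two ReLU neurons, both with input weights (1, 1).
    h1 = relu(x1 + x2)        # bias 0
    h2 = relu(x1 + x2 - 1.0)  # bias -1: only active when both inputs are 1
    # Output layer: a weighted sum of the hidden activations, weights (1, -2).
    return h1 - 2.0 * h2


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            # Last column prints 0.0, 1.0, 1.0, 0.0 for the four input pairs.
            print(a, b, xor_network(a, b))
```

The hidden layer re-represents the inputs so that the output layer's simple weighted sum can separate them; deep networks repeat this idea across many layers and learn the intermediate representations automatically.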

Current Trends and Future Directions

Today, AI is rapidly transforming industries such as healthcare, finance, transportation, and education. Self-driving cars, personalized medicine, and intelligent tutoring systems are just a few examples of how AI is changing the way we live and work.

Looking ahead, AI research is likely to be shaped by several technologies and research directions, including:

- Edge AI: Many applications, such as those running on IoT devices and smartphones, cannot always afford the latency, bandwidth, or privacy cost of sending data to the cloud for processing. Edge AI refers to running AI models on or near the device that produces the data, which requires models efficient enough to run with limited computational resources.

- Quantum AI: Quantum computing may open up new possibilities for solving complex optimization problems and simulating physical systems. Quantum AI explores whether quantum computers can perform some AI tasks more efficiently than classical computers; whether they can do so in practice remains an active research question.

- Transformers: Transformer models, introduced in 2017, use self-attention to relate every element of a sequence to every other element. They have enabled significant improvements in natural language processing tasks such as machine translation and text summarization, and they underpin today's large language models.

- Explainability and Interpretability: As AI systems become more ubiquitous and influential, there is a growing need to understand how they make decisions. Explainability and interpretability techniques aim to provide insights into the inner workings of AI models.
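The self-attention mechanism behind transformers can be sketched in a few lines. In the original transformer paper ("Attention Is All You Need", 2017), scaled dot-product attention is softmax(Q·Kᵀ / √d_k)·V, where the queries Q, keys K, and values V are linear projections of the same input sequence. The pure-Python sketch below implements a single attention head under simplifying assumptions: the projection matrices are supplied by the caller rather than learned, and multi-head attention, masking, and positional encodings are omitted. All function names are our own.

```python
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of a (n x m) and b (m x p)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: list[float]) -> list[float]:
    top = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - top) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Matrix:
    """Single-head scaled dot-product self-attention.

    x has one row per token; each output row is a weighted average of the
    value vectors, weighted by how strongly that token's query matches
    every token's key.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # token-to-token similarity
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical stand-in for learned projections
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    for row in self_attention(tokens, identity, identity, identity):
        print([round(value, 3) for value in row])
```

Because every token attends to every other token in one step, the computation is highly parallel, which is one reason transformers scaled better than earlier sequential models.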

Conclusion

The development of artificial intelligence has been a gradual process, shaped by technological advances and societal factors. From the rule-based systems of its early decades to the current era of deep learning and transformers, AI has evolved significantly, and research directions such as edge AI and quantum AI may shape its next stage. As AI continues to transform industries and everyday life, understanding its historical development and key milestones helps put current advances in context.